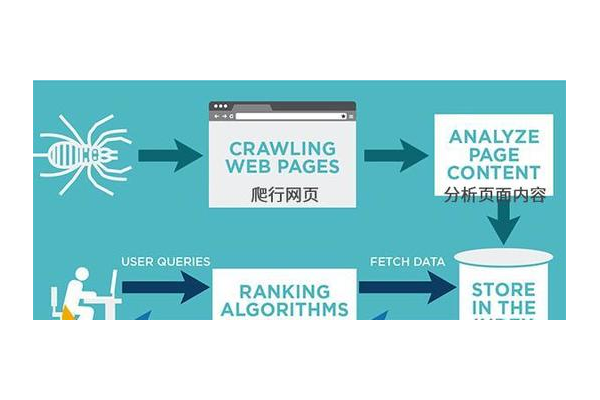

In the realm of Search Engine Optimization (SEO), the concept of a spider pool has emerged as a strategy that both intrigues and divides the digital marketing community. A spider pool in the context of SEO is a network of websites that are interconnected with the primary goal of attracting search engine spiders more frequently and consistently. Search engine spiders, also known as crawlers, are automated programs sent out by search engines like Google, Bing, and Yahoo to discover and index new and updated web pages. By creating a spider pool, webmasters aim to manipulate the crawling behavior of these spiders to their advantage.

The underlying principle of a spider pool is that search engine spiders follow links to navigate the web. When a spider visits a page within the pool, it can easily move to other pages in the same network through the interconnected links. This increases the likelihood that all the pages in the pool will be crawled and indexed promptly. For instance, if a new blog post is published on one of the websites in the spider pool, the spiders that are already active in the network are more likely to quickly find and index this new content. This can potentially lead to faster visibility in search engine results pages (SERPs).
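The link-following behavior described above can be illustrated with a small breadth-first traversal. This is only a sketch under stated assumptions: the `link_graph` dictionary and its page names are hypothetical, the whole "web" lives in memory, and real crawlers add scheduling, politeness rules, and deduplication that are not modeled here.

```python
from collections import deque


def crawl_order(link_graph, start):
    """Return pages in the order a breadth-first crawler would discover them.

    link_graph maps each page to the list of pages it links to.
    """
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        page = queue.popleft()
        order.append(page)
        # Follow every outgoing link that has not been discovered yet.
        for target in link_graph.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return order


# Hypothetical link graph: keys are pages, values are the pages they link to.
link_graph = {
    "site-a/home": ["site-a/blog", "site-b/home"],
    "site-a/blog": ["site-a/new-post"],
    "site-b/home": ["site-a/new-post", "site-c/home"],
    "site-c/home": [],
    "site-a/new-post": [],
}

print(crawl_order(link_graph, "site-a/home"))
```

In this toy graph the new post is reachable from two already-known pages, so the traversal finds it soon after it is linked. A page that nothing links to would never appear in the result, which is the basic reason link structure affects discovery.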

One of the key benefits claimed for spider pools is faster indexing of new content. In the fast-paced digital world, being among the first to appear in search results can make a significant difference in attracting traffic. By leveraging a spider pool, website owners hope to have their fresh content indexed rapidly, giving them an edge over competitors. Additionally, a well-structured spider pool can help distribute link equity. Link equity, or the value passed from one page to another through hyperlinks, is a crucial factor in SEO. When pages within the spider pool are linked to each other in a strategic way, they can share this link equity, which may boost the overall authority and ranking of the websites in the network.
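To make the idea of link equity more concrete, here is a minimal sketch that models it with a simplified PageRank-style iteration. This is an assumption made for illustration: the article does not say how any search engine measures link equity, and production ranking systems are far more complex. The function name `link_equity`, the damping value of 0.85, and the three-page graph are all hypothetical.

```python
def link_equity(link_graph, damping=0.85, iterations=50):
    """Estimate per-page scores with a simplified PageRank-style iteration.

    link_graph maps each page to the pages it links to; every link target
    must also appear as a key. Scores always sum to 1.
    """
    pages = list(link_graph)
    n = len(pages)
    score = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_score = {page: (1.0 - damping) / n for page in pages}
        for page, targets in link_graph.items():
            if targets:
                # A page splits its passed-on score evenly across its links.
                share = damping * score[page] / len(targets)
                for target in targets:
                    new_score[target] += share
            else:
                # A page with no outgoing links spreads its score to all pages.
                share = damping * score[page] / n
                for other in pages:
                    new_score[other] += share
        score = new_score
    return score


# Hypothetical graph: "a" and "b" both link to "c", and "c" links back to "a".
graph = {"a": ["c"], "b": ["c"], "c": ["a"]}
scores = link_equity(graph)
print(sorted(scores, key=scores.get, reverse=True))
```

In this model, page `c` ends up with the highest score because two pages link to it, while `b`, which receives no links, keeps only the baseline share. The model also shows why interlinking alone has limits: score is only redistributed among pages, never created.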

However, it's important to note that the use of spider pools also comes with risks. Search engines are constantly evolving their algorithms to provide the most relevant and high-quality search results to users. Some search engines view the creation of artificial spider pools as an attempt to manipulate the system. If a website is found to be using a spider pool in an unethical or manipulative way, it could face penalties, including being deindexed or having its rankings severely downgraded. For example, if the links within the spider pool are of low quality, such as links that exist purely to increase crawl frequency without adding any real value for users, search engines may detect this as spammy behavior.

Another challenge with spider pools is maintaining their effectiveness. Search engines are getting smarter at identifying and filtering out artificial link networks. As a result, webmasters need to constantly adapt their spider pool strategies to stay ahead. This may involve regularly updating the content on the websites in the pool, ensuring that the links are relevant and natural-looking, and avoiding over-optimization.

In conclusion, while spider pools can offer some potential benefits for SEO in terms of faster indexing and link equity distribution, they should be used with caution. Website owners and SEO professionals need to understand the risks involved and ensure that their use of spider pools complies with search engine guidelines. Instead of relying solely on spider pools, a more holistic approach to SEO that includes high-quality content creation, proper on-page optimization, and building natural backlinks from authoritative sources is often a more sustainable and reliable way to achieve long-term success in search engine rankings. By focusing on providing value to users and following best practices, websites can improve their visibility and attract organic traffic without exposing themselves to the potential pitfalls of using spider pools in an improper manner.
